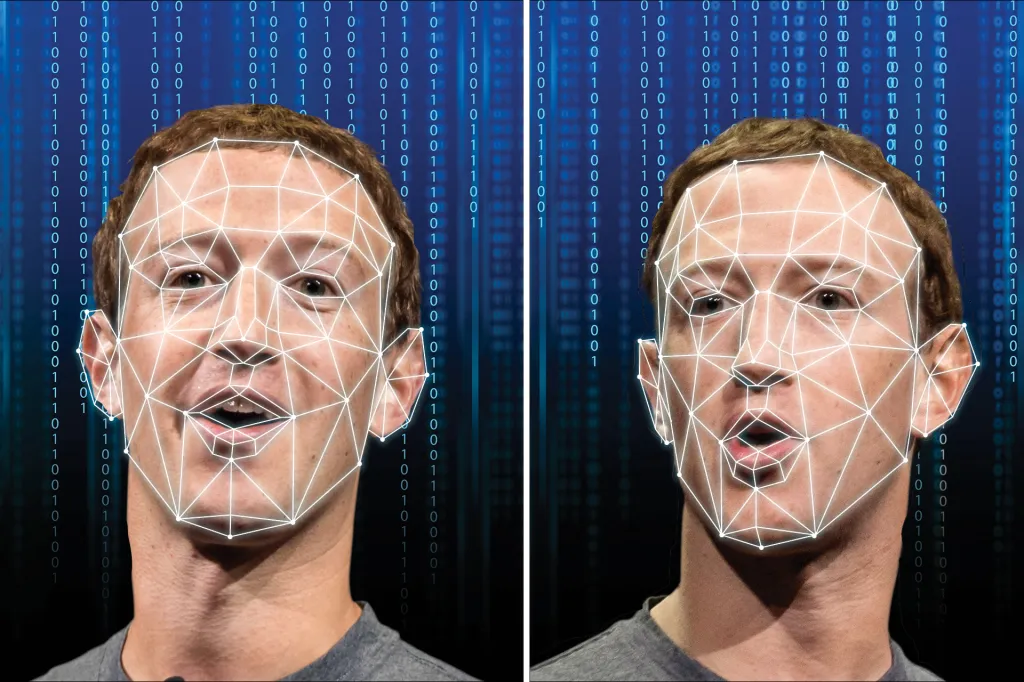

With the rapid growth of synthetic media, particularly deepfakes, the integrity of digital content is increasingly under threat. Deepfakes are manipulated videos or audio clips generated with deep learning models such as Generative Adversarial Networks (GANs), and they can mimic the appearance and voice of real individuals with alarming fidelity. The technology, while impressive, enables misinformation, political manipulation, identity theft, and digital fraud. In response, the research community has focused on developing robust and scalable deepfake detection methods. Among the most promising approaches are multimodal neural networks, which integrate visual, audio, and textual cues to improve detection accuracy.

The Need for Multimodal Approaches

Traditional deepfake detection models often rely on unimodal analysis, focusing on either visual frames (e.g., detecting facial inconsistencies) or audio signals (e.g., identifying artifacts in synthesized speech). However, unimodal models are vulnerable to adversarial attacks and tend to generalize poorly, especially when faced with novel deepfake generation techniques. Multimodal neural networks aim to overcome these limitations by analyzing multiple data streams at once (typically video, audio, and transcripts) to make more informed, context-aware decisions.

The rationale is simple: deepfakes, no matter how realistic, often introduce minor inconsistencies across different modalities. For instance, a synthesized video might display mismatches between lip movements and audio or generate speech that does not align with natural linguistic structures. Multimodal models are better positioned to detect such cross-modal inconsistencies (Zhou et al., 2021).
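As a rough illustration of the lip-movement example, the sketch below correlates a per-frame mouth-opening signal with a per-frame audio energy signal. It is a hand-crafted stand-in, not the learned approach used by the cited models. The function names, the `max_lag` parameter, and the assumption that both signals have already been extracted and resampled to the same frame rate are all hypothetical.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant signal carries no synchronization information
    return cov / math.sqrt(var_x * var_y)

def lip_sync_score(mouth_opening, audio_energy, max_lag=2):
    """Best correlation over small frame offsets, tolerating slight A/V lag.

    Both inputs are assumed to be per-frame values of equal length.
    """
    if len(mouth_opening) != len(audio_energy):
        raise ValueError("signals must have the same number of frames")
    n = len(mouth_opening)
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = mouth_opening[lag:], audio_energy[:n - lag]
        else:
            a, b = mouth_opening[:lag], audio_energy[-lag:]
        if len(a) >= 2:
            best = max(best, pearson(a, b))
    return best

# Toy signals: the first audio track follows the mouth, the second does not.
mouth = [0.1, 0.5, 0.9, 0.4, 0.1, 0.6, 0.8, 0.2]
audio_synced = [m + 0.1 for m in mouth]
audio_mismatched = [0.9, 0.1, 0.2, 0.8, 0.9, 0.3, 0.1, 0.7]
print(lip_sync_score(mouth, audio_synced))
print(lip_sync_score(mouth, audio_mismatched, max_lag=0))
```

A low score on real footage would not prove manipulation by itself; a signal like this is better treated as one input feature among many.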

Architecture of Multimodal Deepfake Detection Models

Modern multimodal deepfake detection architectures typically consist of three parallel pipelines for processing different modalities:

- Visual Modality: Leveraging convolutional neural networks (CNNs) or vision transformers (ViTs), the visual pipeline detects frame-level artifacts, unusual facial warping, inconsistent lighting, or unnatural eye blinking patterns—common hallmarks of video deepfakes (Afchar et al., 2018).

- Audio Modality: Using recurrent neural networks (RNNs), convolutional audio encoders, or spectrogram-based CNNs, this branch analyzes the speech signal for abnormal frequency patterns, waveform artifacts, or phase distortions introduced by synthesis models like WaveNet or MelGAN (Khalid et al., 2021).

- Textual Modality: If transcripts are available, natural language processing (NLP) models such as BERT or RoBERTa are used to assess semantic coherence, emotional tone, and syntactic structure, which in synthetic speech may differ from the patterns typical of human speakers.

The outputs from these pipelines are then fused—either via attention mechanisms or late fusion strategies—to make the final binary or probabilistic classification of real vs. fake content.
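The late fusion option can be sketched in a few lines. The example assumes each pipeline has already produced a single logit (positive meaning "more likely fake"). The `late_fusion` function, the modality names, and the weighting scheme are illustrative assumptions rather than the design of any cited system.

```python
import math

def sigmoid(z):
    """Map a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def late_fusion(logits, weights=None):
    """Combine per-modality logits into one probability that the clip is fake.

    logits:  dict mapping modality name -> logit (positive = more likely fake).
             A missing modality (e.g. no transcript) is simply left out.
    weights: optional dict of positive weights per modality (hypothetical;
             in practice these might be learned or tuned on validation data).
    """
    if not logits:
        raise ValueError("at least one modality is required")
    if weights is None:
        weights = {name: 1.0 for name in logits}
    total = sum(weights[name] for name in logits)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    fused = sum(weights[name] * logits[name] for name in logits) / total
    return sigmoid(fused)

# Visual and audio branches lean "fake", the text branch leans "real".
scores = {"visual": 2.0, "audio": 1.0, "text": -0.5}
print(late_fusion(scores))
print(late_fusion(scores, weights={"visual": 2.0, "audio": 1.0, "text": 1.0}))
```

Averaging logits keeps the branches independent, which makes it easy to drop a modality at inference time. Attention-based fusion gives up that simplicity in exchange for modeling interactions between modalities.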

Cross-Modal Attention and Temporal Modeling

One of the key advances in this field is the incorporation of cross-modal attention mechanisms. Inspired by transformers, these mechanisms allow the network to attend to relationships between different modalities, e.g., checking that audio is synchronized with lip movements or that speech content matches facial expressions (Chen et al., 2022). Cross-attention of this kind increases sensitivity to subtle inconsistencies.
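The core computation behind cross-modal attention is scaled dot-product attention in which the queries come from one modality and the keys and values from another. The sketch below is a minimal version under simplifying assumptions: it has no learned projection matrices and no multiple heads, and it uses toy two-dimensional features. The function and variable names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_modal_attention(queries, keys, values):
    """Scaled dot-product attention across modalities.

    queries: features from one modality, e.g. audio, shape [T_q][d]
    keys:    features from another, e.g. video frames, shape [T_k][d]
    values:  features paired with the keys, shape [T_k][d_v]
    Returns (outputs [T_q][d_v], attention weights [T_q][T_k]).
    """
    d = len(keys[0])
    d_v = len(values[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[c] for w, v in zip(weights, values)) for c in range(d_v)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Two audio steps attend over two video frames (toy features).
audio_feats = [[1.0, 0.0], [0.0, 1.0]]
video_feats = [[4.0, 0.0], [0.0, 4.0]]
attended, attn = cross_modal_attention(audio_feats, video_feats, video_feats)
print(attn)
```

In a trained detector, a weight matrix that fails to concentrate on temporally aligned frames could be one indication of audio-visual mismatch, though how the weights are used varies by architecture.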

Moreover, the inclusion of temporal modeling—using 3D CNNs or bidirectional LSTM layers—enables the system to track temporal coherence across video frames, which is critical for detecting deepfakes that rely on frame-wise generation or motion smoothing.
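To make the idea of temporal coherence concrete, the following sketch flags frames whose embedding changes abruptly relative to the rest of the clip. This is a simple hand-computed heuristic, not a 3D CNN or bidirectional LSTM. The per-frame embeddings are assumed to come from some upstream visual encoder, and the `factor` threshold is an arbitrary illustrative choice.

```python
import math
import statistics

def cosine_distance(u, v):
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 1.0
    return 1.0 - dot / (norm_u * norm_v)

def temporal_jumps(frame_embeddings):
    """Distance between each pair of consecutive frame embeddings."""
    return [cosine_distance(a, b)
            for a, b in zip(frame_embeddings, frame_embeddings[1:])]

def flag_incoherent_frames(frame_embeddings, factor=3.0):
    """Indices i where the jump from frame i-1 to i exceeds factor x median jump."""
    jumps = temporal_jumps(frame_embeddings)
    if not jumps:
        return []
    # Floor the median so a perfectly static clip does not flag float noise.
    threshold = factor * max(statistics.median(jumps), 1e-6)
    return [i + 1 for i, jump in enumerate(jumps) if jump > threshold]

# A smoothly rotating toy embedding, then the same clip with one glitched frame.
smooth = [[math.cos(0.05 * t), math.sin(0.05 * t)] for t in range(8)]
glitched = [list(frame) for frame in smooth]
glitched[4] = [math.cos(1.7), math.sin(1.7)]
print(flag_incoherent_frames(smooth))
print(flag_incoherent_frames(glitched))
```

A learned temporal model replaces the fixed threshold with features trained end to end, but the underlying signal (discontinuity between neighboring frames) is the same.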

Datasets and Benchmarking

Popular datasets used in multimodal detection include DFDC (the Deepfake Detection Challenge dataset), FaceForensics++, and FakeAVCeleb. DFDC and FakeAVCeleb provide video with accompanying audio, whereas FaceForensics++ covers visual manipulations only, so it serves mainly as a benchmark for the visual branch. Multimodal models have often been reported to outperform unimodal baselines on audio-visual benchmarks. Strong visual-only systems remain competitive, however: the DFDC competition was ranked by log loss rather than AUC, and its top-ranked entry was an ensemble of frame-level EfficientNet classifiers (Dolhansky et al., 2020).
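Benchmark results of this kind are usually summarized with ROC AUC or log loss. The sketch below computes both from scratch for a handful of toy predictions; the labels and scores are made up for illustration and are not results from any dataset.

```python
import math

def roc_auc(labels, scores):
    """Probability that a random fake outscores a random real sample.

    labels: 1 for fake, 0 for real. Ties count as half a win.
    Uses the O(n^2) pairwise definition, which is fine for small examples.
    """
    fakes = [s for y, s in zip(labels, scores) if y == 1]
    reals = [s for y, s in zip(labels, scores) if y == 0]
    if not fakes or not reals:
        raise ValueError("need at least one real and one fake sample")
    wins = 0.0
    for f in fakes:
        for r in reals:
            if f > r:
                wins += 1.0
            elif f == r:
                wins += 0.5
    return wins / (len(fakes) * len(reals))

def log_loss(labels, probs, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)

labels = [1, 1, 0, 0]          # toy ground truth: two fakes, two reals
scores = [0.9, 0.4, 0.5, 0.1]  # toy predicted probabilities of "fake"
print(roc_auc(labels, scores))   # 0.75
print(log_loss(labels, scores))
```

AUC depends only on how samples are ranked, while log loss also penalizes confident wrong predictions, so the two metrics can order the same models differently.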

Challenges and Future Directions

Despite promising results, several challenges remain. First, multimodal models are computationally expensive, making them less suitable for deployment on edge devices. Second, they are susceptible to domain shift—performance often degrades when tested on deepfakes generated using unseen architectures. Third, the lack of aligned, high-quality multimodal datasets limits generalization.

Future research should focus on developing lightweight and domain-agnostic architectures, as well as self-supervised pretraining on large unlabeled datasets to enhance robustness. Additionally, explainable AI (XAI) techniques are increasingly necessary to interpret decisions made by these black-box models, especially in high-stakes applications such as legal proceedings or journalism.

Conclusion

Deepfakes represent a formidable challenge in the digital age, but multimodal neural networks offer a highly promising line of defense. By integrating vision, audio, and text, these systems can detect cross-modal anomalies that elude unimodal approaches. As generative models become more sophisticated, detection frameworks must evolve with them, and multimodal architectures are well placed to lead that effort.

References

- Afchar, D., Nozick, V., Yamagishi, J., & Echizen, I. (2018). MesoNet: a Compact Facial Video Forgery Detection Network. WIFS.

- Zhou, P., Han, X., Morariu, V. I., & Davis, L. S. (2021). Two-Stream Neural Networks for Tampered Face Detection. CVPR.

- Khalid, M., Amin, M., Javed, A., & Mehmood, I. (2021). Audio Deepfake Detection: A Survey. arXiv preprint arXiv:2107.03417.

- Chen, L., He, Y., & Liu, Y. (2022). Cross-modal Attention Networks for Deepfake Detection. ICASSP.

- Dolhansky, B., et al. (2020). The Deepfake Detection Challenge Dataset. arXiv preprint arXiv:2006.07397.