Surveillance capitalism, a term popularized by Harvard professor Shoshana Zuboff, refers to the commodification of personal data through surveillance technologies, primarily by powerful tech corporations. In the age of artificial intelligence (AI), this practice has evolved and deepened in complexity, posing urgent ethical questions. AI systems feed on massive volumes of data, much of which is harvested through opaque mechanisms from users who often remain unaware of the extent of their digital exposure. The integration of AI into surveillance capitalism exacerbates concerns about autonomy, consent, privacy, and democratic control.

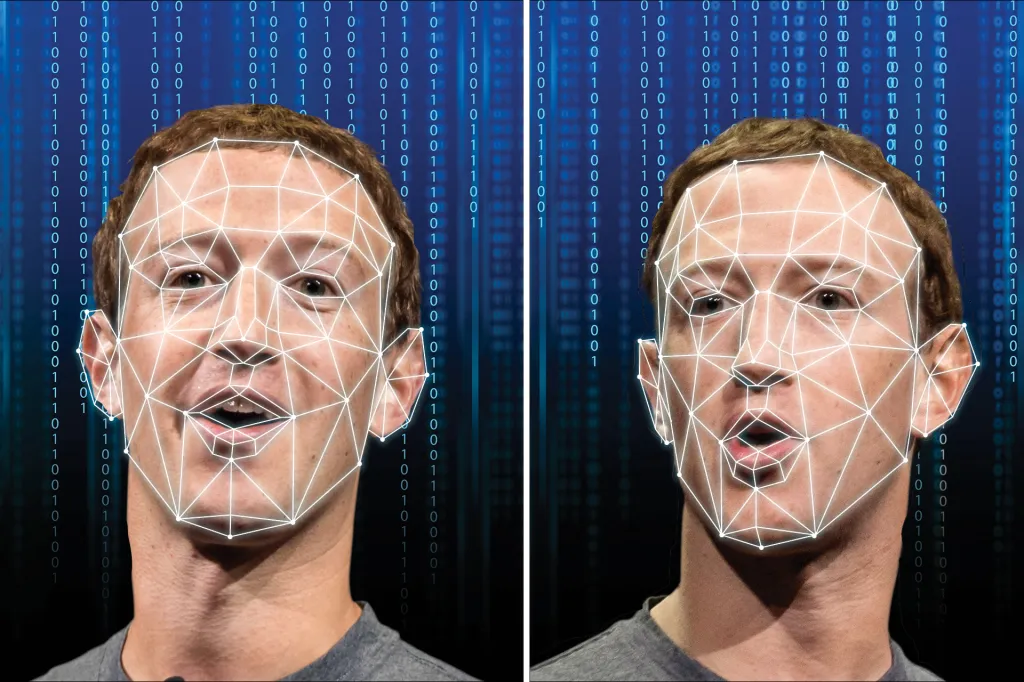

One of the primary ethical concerns lies in informed consent. Most digital platforms collect user data under vague terms of service agreements, which users rarely read or understand. AI-driven systems amplify this opacity, learning patterns and predicting behaviors based not only on the data users willingly share but also on inferred “shadow” data that users never knowingly provided. This raises the question: can consent ever be truly informed when the mechanisms of data extraction and inference are inherently inscrutable to the average user (Zuboff, 2019)?

Additionally, algorithmic profiling can result in discrimination and social stratification. AI tools often reinforce existing societal biases because they are trained on historical data that encode past prejudice. Under surveillance capitalism, these biased algorithms are used to target users with content, advertisements, and even financial services, potentially leading to digital redlining, in which certain communities are excluded from opportunities on the basis of algorithmic predictions (Eubanks, 2018).

Another ethical concern is the erosion of privacy as a fundamental right. In AI-powered surveillance capitalism, privacy is no longer viewed as a default human condition but as a privilege afforded selectively. Continuous tracking through smart devices, apps, and interconnected platforms means individuals are perpetually surveilled in both public and private spheres. This continuous monitoring creates a “panopticon effect,” where people alter their behavior due to perceived surveillance, threatening individual autonomy and freedom (Lyon, 2018).

Furthermore, democratic institutions are undermined when data monopolies accumulate unprecedented power. Companies like Google, Meta, and Amazon not only influence consumption patterns but also shape public opinion, political discourse, and even electoral outcomes through microtargeting and behavioral nudging. AI technologies make this influence more subtle, scalable, and effective. Without accountability mechanisms, this concentration of influence shifts power away from the public and elected institutions toward unregulated private actors.

To address these ethical dilemmas, a multi-pronged response is essential. Regulatory frameworks such as the EU’s General Data Protection Regulation (GDPR) and the EU’s AI Act, adopted in 2024, provide templates for ensuring data rights and transparency. Ethical AI principles, such as fairness, accountability, and explainability, should be embedded into the design and deployment phases of AI systems. Moreover, public awareness and digital literacy must be enhanced to empower individuals to take control of their digital lives.

In conclusion, surveillance capitalism in the AI era represents a profound ethical challenge. As AI technologies evolve, so too must our commitment to safeguarding human rights, ensuring democratic oversight, and rebuilding data practices on a foundation of transparency and consent. Without a rigorous ethical response, the age of AI risks becoming an era of digital domination rather than digital empowerment.

References

- Zuboff, S. (2019). The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. PublicAffairs.

- Eubanks, V. (2018). Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. St. Martin’s Press.

- Lyon, D. (2018). The Culture of Surveillance: Watching as a Way of Life. Polity.